Language Identification (LID)

- ashvineek9

- Oct 26, 2021


Linear and logistic regression

Linear regression and logistic regression are both supervised machine learning algorithms. Both are parametric models built on a linear equation of the input features.

The differences between linear regression and logistic regression: Linear regression handles regression problems, whereas logistic regression handles classification problems. Linear regression produces a continuous output; logistic regression produces a probability that is thresholded into a discrete class label. Linear regression finds the best-fitting line, while logistic regression goes one step further and passes the line's values through the sigmoid curve. Linear regression is typically fit by minimizing the mean squared error, whereas logistic regression is fit by maximum likelihood estimation, which is equivalent to minimizing the log loss.

y = mx + c for linear regression and S(x) = 1 / (1 + e^(-x)) for the sigmoid used in logistic regression
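The two equations and the two loss functions mentioned above can be sketched in a few lines of plain Python. This is only an illustration, not the code used for this post: the function names, the single-feature setup, and the 0.5 threshold are assumptions made for the example.

```python
import math

def linear_predict(x, m, c):
    """Linear regression prediction y = m*x + c (continuous output)."""
    return m * x + c

def sigmoid(z):
    """Logistic function S(z) = 1 / (1 + e^(-z)); maps any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def logistic_predict(x, m, c, threshold=0.5):
    """Pass the linear score through the sigmoid, then threshold it.

    The 0.5 threshold is an assumed default, not something fixed by the model.
    """
    probability = sigmoid(linear_predict(x, m, c))
    return probability, int(probability >= threshold)

def mean_squared_error(y_true, y_pred):
    """Average squared difference, the usual loss for linear regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def log_loss(y_true, p_pred):
    """Negative mean log-likelihood of 0/1 labels.

    Minimizing this is the same as maximum likelihood estimation.
    Probabilities must lie strictly between 0 and 1.
    """
    total = sum(
        t * math.log(p) + (1 - t) * math.log(1 - p)
        for t, p in zip(y_true, p_pred)
    )
    return -total / len(y_true)
```

For instance, `logistic_predict(0, 1, 0)` gives a probability of 0.5, which the assumed threshold maps to class 1.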

Support Vector Machine

SVM is used for both classification and regression problems. In a binary classification problem, the decision boundary that separates the two groups of data is called a hyperplane. Two parallel boundary planes, one on each side of the hyperplane, pass through the closest data points of each class; those closest points are the support vectors, and the distance between the two boundary planes is the margin. The hyperplane should be oriented so that the margin is as large as possible. SVM is not limited to 2D or 3D data: it works in any number of dimensions, where the hyperplane is a line in 2D and a plane in 3D. SVM kernels implicitly map the data from a low-dimensional space to a higher-dimensional one in which the classes may become linearly separable. The aim of training is to find the hyperplane that maximizes the margin.
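To make the geometry concrete, here is a small sketch that measures the margin of a given hyperplane, treating support vectors as the data points that lie exactly on the two margin planes. It does not train an SVM: the weights `w`, the offset `b`, and the four 2D points are hand-picked, hypothetical values chosen so that the closest points score exactly +1 or -1.

```python
import math

def decision_value(w, b, x):
    """Signed score w.x + b; its sign tells which side of the hyperplane x is on."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def margin_width(w):
    """Distance between the margin planes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

def support_vectors(w, b, points, tol=1e-9):
    """Points that lie on one of the two margin planes (|w.x + b| == 1)."""
    return [x for x in points if abs(abs(decision_value(w, b, x)) - 1.0) <= tol]

# Toy data (hypothetical): two classes separable by the vertical line x1 = 2.
points = [(1.0, 0.0), (0.0, 1.0), (3.0, 0.0), (4.0, 2.0)]
labels = [-1, -1, 1, 1]

# Hand-picked hyperplane x1 - 2 = 0, not the result of training.
w, b = (1.0, 0.0), -2.0

print(margin_width(w))                 # width of the margin for this w
print(support_vectors(w, b, points))   # points sitting on the margin planes
```

Training an SVM amounts to searching for the `w` and `b` that make `margin_width(w)` as large as possible while every point still satisfies `label * decision_value(w, b, x) >= 1`.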

Pros:

It works really well when there is a clear margin of separation.

It is effective in high-dimensional spaces.

It is effective in cases where the number of dimensions is greater than the number of samples.

It uses a subset of training points in the decision function (called support vectors), so it is also memory efficient.

Cons:

It doesn’t perform well on large data sets because the required training time is high.

It also doesn’t perform well when the data set is noisy, i.e. when the target classes overlap.

SVM doesn’t directly provide probability estimates; in scikit-learn these are calculated using an expensive five-fold cross-validation.

K Nearest Neighbour

Nearest-neighbor methods come in both unsupervised and supervised forms. Unsupervised nearest neighbors is the foundation of manifold learning and spectral clustering algorithms. Supervised KNN is used for both classification and regression problems. It assigns a new data point to the category of the training points it is most similar to. It is known as a lazy learner because it stores the training data and defers all computation until a prediction is requested. For classification, KNN works as follows:

Step-1: Select the number K of the neighbors

Step-2: Calculate the Euclidean distance from the new data point to each training point.

Step-3: Take the K nearest neighbors as per the calculated Euclidean distance.

Step-4: Among these k neighbors, count the number of the data points in each category.

Step-5: Assign the new data point to the category with the largest number of neighbors.

Step-6: The model is ready.
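The steps above can be sketched in plain Python as follows. This is a minimal illustration rather than the implementation used for this post: the function name `knn_classify`, the default `k=3`, and the toy 2D points are all assumptions.

```python
import math
from collections import Counter

def knn_classify(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Step 2: Euclidean distance from the query to every training point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Step 3: keep the k nearest neighbors.
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    # Steps 4 and 5: count labels and return the most frequent one.
    # On a tie, Counter keeps first-seen order, so the closer neighbor wins.
    votes = Counter(nearest_labels)
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): two well-separated clusters.
train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["A", "A", "A", "B", "B", "B"]

print(knn_classify(train_points, train_labels, (2, 2)))
print(knn_classify(train_points, train_labels, (6, 5)))
```

Note that there is no training step: all the work happens inside `knn_classify` at prediction time, which is why KNN is called a lazy learner.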

Naive Bayes

Naive Bayes is a supervised learning algorithm, mostly used for classification problems. It applies Bayes’ theorem with the naive assumption that the features are conditionally independent given the class. It is among the simplest Bayesian network models. MultinomialNB implements the naive Bayes algorithm for multinomially distributed data and is one of the two classic naive Bayes variants used in text classification (where the data are typically represented as word count vectors, although tf-idf vectors are also known to work well in practice). It is fast and scales easily to large datasets. The scikit-learn library provides several naive Bayes models, including Gaussian, Multinomial, and Bernoulli.
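As a rough illustration of the multinomial variant on word counts, the sketch below trains and applies a tiny naive Bayes classifier in plain Python. It is not scikit-learn's MultinomialNB and not the pipeline used for this post: the function names, the add-one smoothing value `alpha=1.0`, the choice to skip unseen words, and the four toy sentences are assumptions made for the example.

```python
import math
from collections import Counter, defaultdict

def train_multinomial_nb(documents, labels, alpha=1.0):
    """Estimate log priors and smoothed log word likelihoods per class."""
    class_doc_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for words, label in zip(documents, labels):
        word_counts[label].update(words)
        vocabulary.update(words)

    model = {}
    for label, n_docs in class_doc_counts.items():
        total_words = sum(word_counts[label].values())
        # Additive (Laplace) smoothing so unseen class/word pairs are not zero.
        denominator = total_words + alpha * len(vocabulary)
        model[label] = {
            "log_prior": math.log(n_docs / len(labels)),
            "log_likelihood": {
                word: math.log((word_counts[label][word] + alpha) / denominator)
                for word in vocabulary
            },
        }
    return model

def predict_multinomial_nb(model, words):
    """Return the class with the highest log posterior score."""
    scores = {}
    for label, params in model.items():
        score = params["log_prior"]
        for word in words:
            # Assumption: words never seen in training are simply skipped.
            if word in params["log_likelihood"]:
                score += params["log_likelihood"][word]
        scores[label] = score
    return max(scores, key=scores.get)

# Toy data (hypothetical): tokenized sentences labeled by language.
documents = [
    ["the", "cat", "is", "here"],
    ["the", "dog", "is", "there"],
    ["le", "chat", "est", "ici"],
    ["le", "chien", "est", "la"],
]
labels = ["en", "en", "fr", "fr"]

model = train_multinomial_nb(documents, labels)
print(predict_multinomial_nb(model, ["the", "cat", "is", "there"]))
print(predict_multinomial_nb(model, ["le", "chien", "est", "ici"]))
```

Summing log probabilities word by word is exactly where the independence assumption shows up: each word contributes to the score separately, regardless of the words around it.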

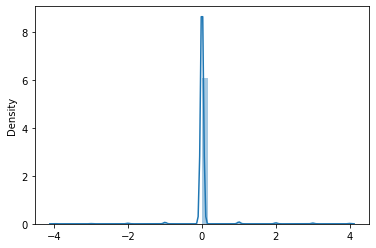

Training results

XGBOOST
